AI in 2026: From Tool to Active Participant

The most important takeaway from CES 2026 wasn’t a single product or demo; it was the shift in how AI is being used. AI is no longer just a tool that analyzes or recommends. It’s becoming an active interface that can see, reason and act in the physical world.

For CEOs, this signals a real strategic pivot. The next wave of advantage won’t come from experimenting with AI; it will come from operationalizing physical AI and agentic AI inside core systems. In other words, advantage will come from execution.

What CES 2026 Made Clear

Several themes dominated conversations on the show floor:

Physical AI

This was the breakout term of CES. Physical AI refers to models trained in simulated environments and then deployed into machines such as robots, vehicles and industrial systems that operate in the real world. Unlike traditional software, these systems must interpret physical constraints, uncertainty and motion in real time.

Agentic AI

Agentic systems can reason, plan, use tools and execute multi-step tasks autonomously. They don’t just respond to prompts; they pursue goals. Because an agent can call tools and look up information as it works, a model no longer has to be trained to know everything on day one. AI is transitioning from a reactive assistant to an active problem solver.

Edge AI and Local Processing

AI is moving off the cloud and closer to where decisions are made. Chips from Nvidia, AMD, Intel and Qualcomm are bringing inference and learning to the edge, which cuts latency, improves privacy and lets AI keep working even when connectivity is limited.

Humanoid and Industrial Robotics

Robots are moving from demos to deployment. Boston Dynamics’ Atlas, slated for use in Hyundai EV factories, signaled that humanoid robots are becoming practical tools rather than science fiction.

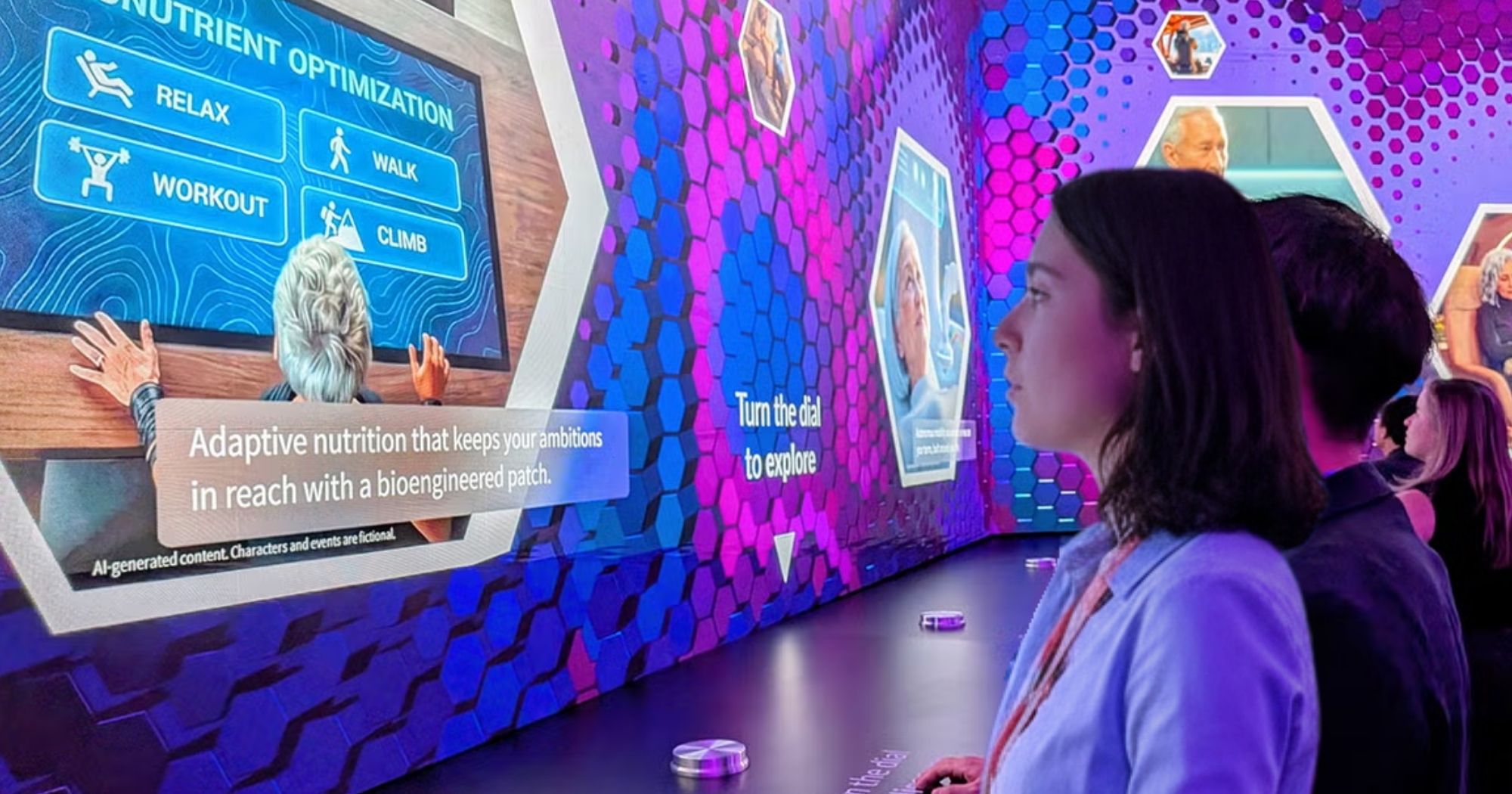

AI as an Interface (Ambient Intelligence)

AI is fading into the background, embedded into environments rather than front-and-center on screens. This “calm tech” approach prioritizes frictionless experiences that surface intelligence only when it’s needed.

Jensen Huang’s Signal: Why 2026 Is Different

In his CES keynote, Nvidia CEO Jensen Huang described the shift from reactive AI tools to physical, agentic systems in detail.

In 2024, agentic AI systems began to emerge. In 2025, they started to proliferate, solving real problems by reasoning, planning, simulating outcomes and using tools dynamically. In 2026, those systems will move from experimentation to scale.

Huang emphasized that physical AI requires two kinds of intelligence working together:

- AI that can act in the world

- “AI physics,” models that understand the laws of nature governing that world

This is why simulation, digital twins and synthetic data are becoming foundational. Companies like Nvidia and Siemens are compressing years of testing into weeks by training AI in virtual environments before deployment.

Equally important was Huang’s focus on open models. Open innovation has removed access as the bottleneck. Startups, enterprises, researchers and entire countries can now participate simultaneously. As a result, competitive advantage is shifting from who has the best model to who executes best.

His architectural point matters for every CEO: Future applications are built on AI. They are inherently multimodal, multi-model, hybrid cloud and edge-native. Intelligence must be customizable to your domain while staying at the frontier.

Lessons Learned From 2025

The companies that pulled ahead in 2025 shared a few traits:

- Focus beats ambition. Winners solved one painful problem deeply instead of pitching broad platforms.

- Buying usually beats building. Many companies underestimated the cost and governance required to run AI in production.

- Vertical AI pulled ahead. Industry-specific understanding mattered more than raw model power.

- Distribution mattered more than model quality. AI embedded in existing workflows outperformed standalone tools.

Where companies stumbled was just as revealing. Many tried to apply AI to broken processes, while others fed poor data into advanced models. Too many led with technology instead of strategy, while governance lagged behind.

What Changes in 2026

Several shifts are already underway:

- Every industrial company becomes an AI company, or falls behind

- Small teams create outsized value as AI increases leverage

- Open models accelerate adoption; execution becomes the constraint

- Physical AI reaches deployment scale, not just pilots

- Infrastructure, including compute, networking and power, becomes a competitive limiter

The Bottom Line

The companies entering 2026 with momentum are the ones that learned how to operationalize AI, not just talk about it. Physical and agentic AI are no longer future concepts; they are being deployed where seconds matter, outcomes are measurable and failure is costly.

In an AI-driven economy, sloppy execution isn’t just inefficient; it’s a liability the market won’t forgive.

2026 will be the year results are measured and matter.

CEO Checklist: Positioning for AI in 2026

- Start with outcomes, not tools

- Fix workflows before automating them

- Audit your data now; many AI failures are data failures

- Buy strategically, build selectively

- Invest in governance early; trust accelerates adoption

Earning Media Coverage in the Age of Vertical AI

Over the past year, the AI media narrative has shifted. “We’ve moved away from broad claims about what AI might do someday and toward practical conversations about what AI is already doing inside specific industries,” said Brent Shelton, Bospar VP of Media Relations.

That shift helps explain why vertical AI is gaining ground so quickly, and it means the way companies pitch, position and earn coverage for AI innovation must evolve alongside it.

For a long time, horizontal AI platforms dominated headlines by promising scale and universality. That story worked when the market was still exploring possibilities. Today, buyers and reporters are far more skeptical. They’re looking for proof, constraints and outcomes. They want evidence of what AI is actually doing, not what it might do someday.

Why Vertical AI Is Outperforming Horizontal AI in the Media

Vertical AI delivers all three (proof, constraints and outcomes) because it’s built around a defined industry, known workflows and actual operating conditions. Whether in healthcare, retail, manufacturing or financial services, vertical AI speaks the language of its users in a way general-purpose platforms rarely do, and it addresses that industry’s real-world challenges.

Trust has become a defining factor in coverage. Enterprises are no longer experimenting casually with AI; they’re asking whether a system understands regulatory requirements, risk thresholds and the consequences of being wrong.

“Vertical AI earns credibility faster because it narrows its focus and shows real domain awareness,” Shelton notes. For journalists, that credibility matters. Editorial decisions increasingly hinge on whether a technology is safe, reliable and already embedded in day-to-day work, not just technically impressive.

ROI is another reason vertical AI stories resonate. Generic productivity claims have faded into background noise. What cuts through now are concrete results tied to existing business metrics: reduced downtime, faster claims processing, improved conversion rates, lower fraud or fewer manual steps. These results are easier for reporters to explain and for readers to understand. Vertical AI maps naturally to them, enabling compelling stories without overselling the technology.

Data realities also shape the narrative. The most valuable enterprise data isn’t public or standardized; it lives inside legacy systems and industry-specific environments. Vertical AI companies are designed to integrate with that complexity rather than ignore it. From a media perspective, this opens the door to more grounded discussions about data quality, governance and why deploying AI responsibly at scale is hard. Those conversations feel authentic, and authenticity travels further with today’s press.

All this changes what breaks through. High-level platform messaging and abstract model discussions are losing effectiveness. Reporters are far more responsive to specific workflows, named customer use cases and operational leaders who can speak from experience. Stories that acknowledge constraints, such as regulation, legacy infrastructure and risk tolerance, tend to feel more credible and, ultimately, more newsworthy.

The takeaway for tech-focused PR teams is straightforward. Vertical AI demands sharper storytelling, fewer sweeping claims and a stronger emphasis on how AI fits into real systems and real jobs. “The strongest coverage today comes from showing how AI quietly improves essential work, not from promising disruption,” says Shelton.

Vertical AI wins media attention not by being louder or flashier, but by being grounded, credible and demonstrably useful. An effective media strategy must reflect that shift.

Key takeaways:

- Vertical AI resonates because it’s grounded in present-day impact within specific industries

- Credibility, trust and real-world deployment drive editorial decisions

- ROI-based stories with metric-driven outcomes cut through the noise

- Data realism signals authenticity in AI storytelling